Exploratory analysis of multilingual SAE features

Recent research from Anthropic1 suggests that Sparse Autoencoder (SAE) features can be multilingual, activating for the same concept across multiple languages. However, if multilingual features are scarce and not as good as monolingual ones, SAEs could have their robustness undermined, leaving them vulnerable to failures and adversarial attacks in languages not well-represented by the model. In this post, I present findings from an exploratory analysis conducted to assess the degree of multilingualism in SAE features.

This blogpost is part of my project completed during the AI Safety Fundamentals Alignment Course.

Introduction

Sparse autoencoders

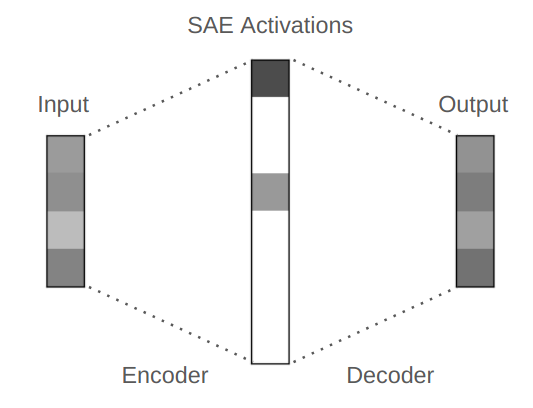

SAEs are a specialized form of autoencoders that generate a sparse representation of vectors. This process typically involves learning two functions, \(f_\text{enc}: \R^{n \times m}\) (encoder) and \(f_\text{dec}: \R^{m \times n}\) (decoder), where \(m\) denotes the number of features, with \(m\) being significantly greater than \(n\)2. More specifically, the decoder is defined as \(f_\text{dec}(\mathbf{f}) = x_\text{dec} + \sum_{i=1}^m f_i \mathbf{d}_i\), where \(\mathbf{f}\) is the vector of features and \(\mathbf{d}_i\) denotes the feature directions.

During the learning phase, the SAE is encouraged to accurately reconstruct the original vector by minimizing the L2 loss associated with the difference between the reconstruction and the original. Additionally, it aims to achieve this using the fewest number of features possible by minimizing the L1 loss on the features. This trade-off between accurate reconstructions and sparse features is balanced through a sparsity coefficient on the L1 loss, defined as a hyperparameter.

Parallel corpora

Parallel corpora are datasets consisting of aligned text pairs in two or more languages, enabling direct mapping between linguistic units across languages. Formally, a parallel corpus can be represented as a set of tuples, \(\{ (s_1, t_1), (s_2, t_2), \dots, (s_k, t_k) \}\), where \(s_i\) and \(t_i\) denote sentences or phrases in source and target languages, respectively. These datasets are critical for tasks like machine translation and cross-lingual transfer learning. An example of parallel corpora is the FLORES-2004, which includes sets of translations for over 200 high- and low-resource languages and will be utilized for extracting sentences for this exploratory analysis.

Setup

I analyzed two SAEs available in the SAELens

library: gpt2-small-res-jb (trained on GPT-2 Small’s

residual stream) and pythia-70m-deduped-res-sm (trained on

Pythia-70M’s residual stream). The GPT-2 SAE has a dictionary size of

24,576 and operates on the hook_resid_pre activation at

each of the model’s 12 layers, while the Pythia SAE uses 32,768 features

and targets the hook_resid_post activation across its 6

layers.

The analysis covered 12 languages from the FLORES-200 dataset: English, German, Russian, Icelandic, Spanish, Portuguese, French, Chinese, Japanese, Korean, Hindi, and Arabic. This selection balances script diversity (Latin, Cyrillic, Hanzi, Hangul, Devanagari, Arabic) and resource availability, ranging from high-resource languages like English to medium-resource ones like Icelandic. Parallel sentences from the FLORES-200 dev split were used, ensuring direct comparability between language pairs. For tokenization, I employed each base model’s native tokenizer.

Activations were computed using TransformerLens to extract residual stream outputs, with SAE reconstructions generated via the SAELens library. For each language and layer, I recorded three metrics: 1) the total activation magnitude per feature, 2) the activation frequency (the number of tokens where a feature was activated), and 3) the reconstruction MSE.

The code for this project can be found at https://github.com/juanbelieni/aisf-project.

Exploratory analysis

Number of tokens

Due to the higher availability of English texts in the training phase of most language models, tokenization tends to produce fewer tokens for sentences in English, while generating many more tokens for other languages, especially those that use a different writing script.

Below you can see this behavior for both models, where English and languages that uses Latin script have fewer tokens produced by the tokenizer. To account for the extra number of tokens produced by the tokenizer, I will normalize some metrics by the number of tokens produced for each language.

Monolingual features

The number of monolingual features, which I will define as features that activate more than 50% of the time for one specific language, is also presented below. Notably, GPT-2 exhibits a high number of English monolingual features, particularly in its last layers. In contrast, the monolingual features in the first layers are dominated by languages with different writing scripts. This distinction indicates that the latent space in GPT-2’s initial layers is structured more around grammatical properties than semantic ones, and the SAE captures this characteristic.

In Pythia, a similar behavior is observed, where English monolingual features are more prevalent in the last layers. However, the overall number of monolingual features is significantly reduced, with a noticeable dip in the middle layers. Additionally, languages such as Japanese, Korean, and Hindi exhibit a similar pattern to English, with more monolingual features concentrated in the final layers.

This suggests that the distribution of monolingual features in SAEs can vary widely, influenced by factors such as the tokenizer, the model architecture, and the dataset. This last dependency is highlighted in Kissane et al.5, where they show that the performance of an SAE in a specific task is influenced by the dataset. In future work, something similar could be done with the problem presented in this post, by training an SAE on a monolingual corpus and another on a multilingual corpus and comparing them.

Another potential visualization is presented below. I plotted the variance in the frequency of features across all languages. As shown, the layers with high variance correspond to those with a greater number of monolingual features, and the overall shape remains the same. This also suggests that SAEs do not encode loads of both monolingual or fully multilingual features, with few intermediate multilingual features. To further validate this, we can plot the distribution…

Multilingual features

On the following tables, I present the language groups with the highest number of features that activate at least 5% of the time for each language in the group.

For GPT-2 (in the table below), we can see that the first layers have more features focused on languages with non-Latin scripts (as we have already seen), while the following layers have more features focused on languages with Latin scripts (both mono- and multilingual).

| Layer | 1st | 2nd | 3rd | 4th | 5th |

|---|---|---|---|---|---|

| 0 | Arabic, Hindi, Korean, Russian | Chinese, Japanese | Hindi, Korean, Russian | English, French, German, Icelandic, Portuguese, Spanish | Hindi |

| 1 | Chinese, Japanese | Hindi | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | Japanese |

| 2 | Chinese, Japanese | Hindi | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | English, French, Portuguese, Spanish |

| 3 | Chinese, Japanese | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | English | English, French, Portuguese, Spanish |

| 4 | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | Chinese, Japanese | English, French, Portuguese, Spanish |

| 5 | English | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | English, French, Portuguese, Spanish | French, German, Icelandic, Portuguese, Spanish |

| 6 | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French, Portuguese, Spanish | French, German, Icelandic, Portuguese, Spanish |

| 7 | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French, Portuguese, Spanish | English, German |

| 8 | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French, Portuguese, Spanish | English, French |

| 9 | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French, Portuguese, Spanish | English, French |

| 10 | English | English, French, German, Portuguese, Spanish | English, French | English, French, Portuguese, Spanish | English, German |

| 11 | English | English, French | English, German | English, French, Portuguese, Spanish | English, French, German, Portuguese, Spanish |

The results for Pythia also follow what we would expect based on the previous visualizations, where the first and last layers have more monolingual features focused on English. However, the SAE for layer 2 has a surprisingly high amount of full multilingual features, with the first language group containing all the languages I tested.

Pythia:

| Layer | 1st | 2nd | 3rd | 4th | 5th |

|---|---|---|---|---|---|

| 0 | English | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | Spanish | German |

| 1 | English, French, German, Icelandic, Portuguese, Spanish | English | English, French, German, Portuguese, Spanish | English, French, Portuguese, Spanish | English, French, German, Portuguese, Russian, Spanish |

| 2 | Arabic, Chinese, English, French, German, Hindi, Icelandic, Japanese, Korean, Portuguese, Russian, Spanish | Arabic, Chinese, French, German, Hindi, Icelandic, Japanese, Korean, Portuguese, Russian, Spanish | English | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish |

| 3 | English, French, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French, German, Portuguese, Spanish | English | Portuguese |

| 4 | English | English, French, Portuguese, Spanish | English, French, German, Portuguese, Spanish | English, French, German, Icelandic, Portuguese, Spanish | English, French |

| 5 | English | English, French | Chinese, English, Japanese | English, Japanese | English, French, Portuguese, Spanish |

Conclusion

The exploratory analysis conducted on two SAE models (GPT-2 and Pythia) across 12 languages revealed several insights into the multilingual capabilities of SAE features. Both models exhibited a dominance of monolingual features, particularly for English, with these features being more prevalent in later layers. Conversely, features for languages using non-Latin scripts were more common in earlier layers, suggesting that SAEs may capture different types of linguistic information at different depths of the network.

The analysis also highlighted a clear layer-wise progression in feature specialization. Early layers tended to capture language-agnostic or script-specific features, while later layers became increasingly specialized for English and other Latin-script languages. This suggests that SAEs may organize linguistic information hierarchically, with lower layers encoding more general features and higher layers encoding more language-specific or semantic features.

While the study demonstrated that some features are shared across languages, the distribution of multilingual features was not uniform. Layers with higher variances in feature activation frequencies corresponded to those with more monolingual features, indicating that SAEs may not inherently encode a large number of fully multilingual features. Instead, the models appear to rely on a mix of monolingual and partially multilingual features, which could have implications for their robustness and generalization across languages.

These results highlight the importance of understanding how SAEs encode linguistic information, especially in multilingual contexts. If multilingual features are scarce or less effective than monolingual ones, SAEs may struggle with robustness and generalization across languages, potentially making them vulnerable to failures or adversarial attacks. This raises critical questions about the design of SAEs for multilingual models and the need to ensure that they can effectively capture concepts across diverse linguistic representations.

Future Work

Investigating dataset influence. The distribution of monolingual and multilingual features observed in this study may be influenced by the training data of the base models. For instance, GPT-2, being trained primarily on English text, exhibits a strong bias toward English features. Similarly, Pythia, which may have been trained on a more diverse dataset, shows a different distribution of monolingual and multilingual features. Future work could involve training SAEs on monolingual versus multilingual corpora and comparing the resulting feature distributions. This would help clarify whether the observed patterns are inherent to the SAE architecture or are shaped by the training data.

Exploring language groupings. The language groupings identified in this study (e.g., Latin-script languages vs. non-Latin-script languages) suggest that SAE features may cluster around linguistic or typological similarities. Future work could explore this hypothesis further by analyzing whether languages with similar grammatical or typological properties share more features. For example, languages with similar word orders or morphological structures could be expected to share more features in certain layers. This could involve expanding the analysis to include more diverse language families and testing for correlations between feature sharing and linguistic properties.

Adversarial robustness in multilingual contexts. The findings of this study raise concerns about the robustness of SAEs in multilingual contexts. If certain languages are underrepresented in the feature space, models may be more vulnerable to adversarial attacks or failures in those languages. Future work could investigate the susceptibility of SAEs to adversarial perturbations in underrepresented languages and explore strategies for mitigating these vulnerabilities. For example, techniques such as adversarial training or feature regularization could be used to promote more balanced feature distributions across languages.

Developing metrics for multilingual feature evaluation. The analysis in this study relied on simple metrics such as feature activation frequency and monolingual feature counts. While these metrics provided valuable insights, they may not fully capture the complexity of multilingual feature sharing. Future work could involve developing more sophisticated metrics for evaluating the degree of multilingualism in SAE features, such as multilingual feature density or cross-lingual feature alignment. These metrics could help quantify the extent to which features are shared across languages and identify areas where models may be underperforming.